In our previous post, I walked you through how to implement Incremental Static Regeneration (ISR) in Astro using both the Cache-Control header and a custom middleware. In this post, I will expand our implementation to make it a bit more robust and add support for on-demand revalidation.

If you haven't read the post yet, I highly recommend that you do so before continuing. You can find it here.

What is On-Demand Revalidation?

You might be wondering what on-demand revalidation is and why we might need it when doing ISR. On-demand revalidation lets us regenerate a cached page at the moment we choose, instead of waiting for its revalidation time to pass. Sometimes, this is also referred to as "manual revalidation."

Why Do We Need On-Demand Revalidation?

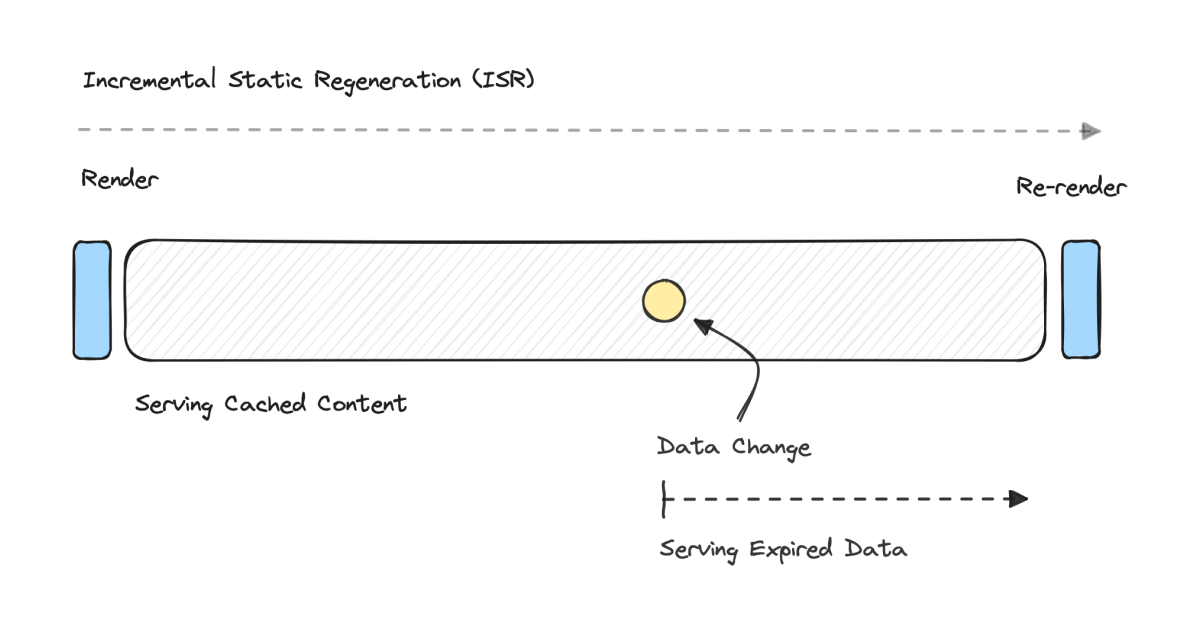

To better understand why we might need on-demand revalidation, let's take a look at how ISR works. The following diagram shows the timeline of a page that is served using ISR.

As you can see, we have an initial render that is done when the page is first requested. After that, we have a revalidation time during which the page will be served from the cache. Once the revalidation time has passed, the page will be re-rendered, and the cache will be updated.

The issue here is that if the data changes somewhere in the middle of the revalidation time, we will keep serving the old page from the cache until the revalidation time has passed. Only the first re-render after that point picks up the change.

This could be a problem if we have long revalidation times and want to make sure we serve the new page as soon as possible. On the other hand, if we have short revalidation times, we will be re-rendering the page more often than we need to. This could get expensive if our pages are complex and take a long time to render.

We can solve this problem by implementing on-demand revalidation. Let's take a look at how that works.

How Does On-Demand Revalidation Work?

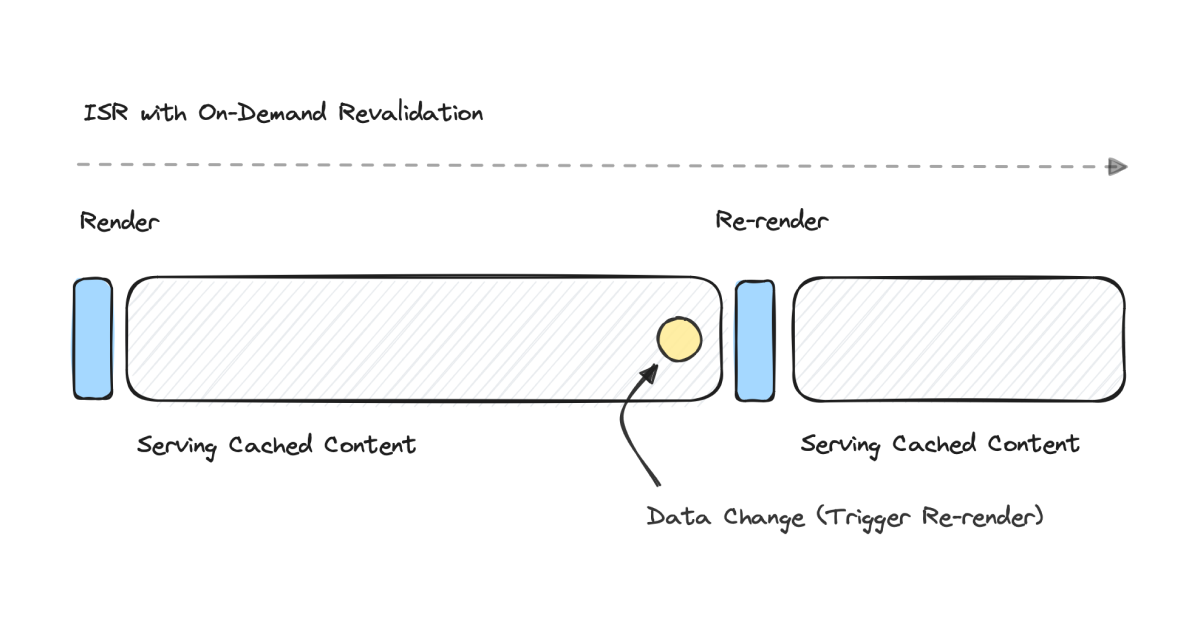

On-demand revalidation, a small addition to ISR, is a mechanism that allows us to trigger the revalidation of a page's cache whenever there is an update to the underlying data, rather than waiting for a predetermined interval to elapse. Let's take a look at how this works.

This diagram is very similar to the previous one, but it has one key difference. Instead of waiting for the revalidation time to pass, our data change triggers a revalidation of the page's cache. This means that we can serve the new page immediately after the data change has been made, regardless of the revalidation time.

This is a very simple change, but it lets us keep long revalidation times, and therefore render less often, without serving stale pages after the data changes. Let's now take a look at how we can implement this in Astro.

How to Implement On-Demand Revalidation

Implementing on-demand revalidation is actually quite simple. Previously, we created an in-memory cache that we used for storing our responses and the expiration time for each page. What we need to do now is add a new global method that allows us to purge specific pages from the cache.

Once we have this method, we can call it from anywhere in our codebase to purge a page from the cache. This will cause the page to be re-rendered the next time it is requested, regardless of the revalidation time.

Let's take a look at how we can implement this in Astro.

Implementing a New Cache

First, let's improve the cache that we created in our previous post. I will move the cache into its own file in src/services/cache.ts and create an interface for it. This will allow us to easily swap out the cache implementation in the future if we need to.
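As a rough sketch, the interface could look something like the following. The names `CacheEntry`, `get`, and `set` and the Promise-based signatures are my assumptions for illustration (only `del` is named later in this post); asynchronous methods are used so that a networked store could implement the same interface.

```typescript
// Hypothetical contents of src/services/cache.ts; names are illustrative.

// A cached page: the stored response plus its absolute expiry time.
export interface CacheEntry {
  response: Response;
  expires: number; // milliseconds since the Unix epoch
}

// The contract every cache implementation has to satisfy.
export interface Cache {
  get(key: string): Promise<CacheEntry | undefined>;
  set(key: string, entry: CacheEntry): Promise<void>;
  del(key: string): Promise<void>;
}
```

Keeping the interface this small means the middleware only ever depends on three operations, which is what makes the implementation swappable.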

Next, we will create a new class that implements this interface using a simple Map. This will allow us to store our responses in memory and easily purge them when needed. I will also add a background task that will run every minute and purge any expired pages from the cache to prevent it from growing too large. We can, of course, implement this cache using Redis, Memcached, or any other caching solution if we need to.
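Here is a minimal sketch of such a class, assuming the interface shape described above. `MemoryCache`, `sweep`, and `stop` are hypothetical names, and the one-minute default is taken from the description rather than from any required setting.

```typescript
// Hypothetical sketch; the interface is repeated so the block stands alone.
export interface CacheEntry {
  response: Response;
  expires: number; // milliseconds since the Unix epoch
}

export interface Cache {
  get(key: string): Promise<CacheEntry | undefined>;
  set(key: string, entry: CacheEntry): Promise<void>;
  del(key: string): Promise<void>;
}

export class MemoryCache implements Cache {
  private readonly store = new Map<string, CacheEntry>();
  private readonly timer: ReturnType<typeof setInterval>;

  constructor(sweepIntervalMs: number = 60000) {
    // Background task: periodically drop expired entries.
    this.timer = setInterval(() => { this.sweep(); }, sweepIntervalMs);
    // In Node, do not let this timer keep the process alive on its own.
    (this.timer as unknown as { unref?: () => void }).unref?.();
  }

  async get(key: string): Promise<CacheEntry | undefined> {
    const entry = this.store.get(key);
    if (!entry) return undefined;
    if (entry.expires <= Date.now()) {
      // Expired entries are treated as misses and removed eagerly.
      this.store.delete(key);
      return undefined;
    }
    return entry;
  }

  async set(key: string, entry: CacheEntry): Promise<void> {
    this.store.set(key, entry);
  }

  async del(key: string): Promise<void> {
    this.store.delete(key);
  }

  // Removes every expired entry and returns how many were removed.
  sweep(now: number = Date.now()): number {
    let removed = 0;
    this.store.forEach((entry, key) => {
      if (entry.expires <= now) {
        this.store.delete(key);
        removed += 1;
      }
    });
    return removed;
  }

  // Stops the background task, e.g. on shutdown or in tests.
  stop(): void {
    clearInterval(this.timer);
  }
}
```

Checking the expiry in `get` as well as in the sweep means a page never outlives its revalidation time, even between two runs of the background task.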

Implementing a New Incremental Static Regeneration Service

Now that we have a new cache implementation, we can move our ISR service into its own file in src/services/isr.ts. What I'm going to do here is a bit over-engineered, but bear with me for a second. I am going to create a new class that implements the Cache interface that we created earlier. However, it will take an instance of the Cache interface as a constructor argument and will delegate all of the cache operations to it. This will allow us to handle the cloning of the responses and also log all of the cache operations.
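A sketch of this wrapper might look like the following. The class name `ISRService`, the log format, and the interface shape are assumptions on my part; the part that matters is that every operation is delegated to the wrapped cache, and that responses are cloned on the way in and on the way out, because a `Response` body can only be read once.

```typescript
// Hypothetical sketch of src/services/isr.ts; names are illustrative.
export interface CacheEntry {
  response: Response;
  expires: number; // milliseconds since the Unix epoch
}

export interface Cache {
  get(key: string): Promise<CacheEntry | undefined>;
  set(key: string, entry: CacheEntry): Promise<void>;
  del(key: string): Promise<void>;
}

export class ISRService implements Cache {
  private readonly cache: Cache;

  constructor(cache: Cache) {
    this.cache = cache;
  }

  async get(key: string): Promise<CacheEntry | undefined> {
    const entry = await this.cache.get(key);
    console.log(`[isr] ${entry ? "HIT" : "MISS"} ${key}`);
    if (!entry) return undefined;
    // Hand out a clone so the stored response stays unread and reusable.
    return { expires: entry.expires, response: entry.response.clone() };
  }

  async set(key: string, entry: CacheEntry): Promise<void> {
    console.log(`[isr] SET ${key}`);
    // Store a clone so the caller can still send the original to the client.
    await this.cache.set(key, {
      expires: entry.expires,
      response: entry.response.clone(),
    });
  }

  async del(key: string): Promise<void> {
    console.log(`[isr] DEL ${key}`);
    await this.cache.del(key);
  }
}
```

Because the wrapper itself implements `Cache`, it can sit in front of any backing store without the middleware knowing which one is in use.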

Let's create a new instance of this class and export it so that we can use it in our middleware later on.

Updating Our ISR Middleware

With our ISR service ready to go, it's time to update our middleware. First, I'm going to update the onRequest method to use our new ISR service instead of the Cache-Control header. This will allow us to use the Cache-Control header for other things, such as caching static assets.

Additionally, I'm adding a new function to define cases where we want to skip the cache. For now, I'm going to skip non-GET requests, but we can add more cases later on if we need to.
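To illustrate the flow, here is a self-contained sketch of the middleware logic. The `Context` and `Next` types are minimal stand-ins for what Astro passes to `onRequest`, the plain `Map` stands in for the ISR service, and `REVALIDATE_MS` and `shouldSkipCache` are hypothetical names. In a real project you would wrap the function with `defineMiddleware` from `astro:middleware` and call the ISR service instead.

```typescript
// Minimal stand-ins for the parts of Astro's middleware API used here.
type Context = { request: Request; url: URL };
type Next = () => Promise<Response>;

// Assumed revalidation time; pick whatever suits your pages.
const REVALIDATE_MS = 60000;

// Stand-in for the ISR service so this sketch runs on its own.
const cache = new Map<string, { response: Response; expires: number }>();

// Cases where the cache is bypassed entirely. Only non-GET requests for now.
function shouldSkipCache(request: Request): boolean {
  return request.method !== "GET";
}

export async function onRequest(context: Context, next: Next): Promise<Response> {
  if (shouldSkipCache(context.request)) {
    return next();
  }

  const key = context.url.pathname;
  const cached = cache.get(key);
  if (cached && cached.expires > Date.now()) {
    // Serve a clone so the stored body can be read again later.
    return cached.response.clone();
  }

  // Miss or expired: render the page, then store a copy for later requests.
  const response = await next();
  if (response.ok) {
    cache.set(key, {
      response: response.clone(),
      expires: Date.now() + REVALIDATE_MS,
    });
  }
  return response;
}
```

Keeping the skip rules in one function makes it easy to add further cases later, such as authenticated requests or preview URLs.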

Perfect! Now we have a new ISR service that we can use to implement on-demand revalidation. Let's take a look at how we can do that.

Implementing On-Demand Revalidation

To get started, I'm going to add a little syntactic sugar to our ISR service called invalidate that will allow us to purge a page from the cache. All this function will do is call the del method on our cache.
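In sketch form, and assuming the service wraps a cache with a `del` method as described, the addition is a one-liner. The class and interface names are the same hypothetical ones as before, and `get` and `set` are left out to keep the focus on the new method.

```typescript
// Hypothetical sketch; names are illustrative.
export interface CacheEntry {
  response: Response;
  expires: number; // milliseconds since the Unix epoch
}

export interface Cache {
  get(key: string): Promise<CacheEntry | undefined>;
  set(key: string, entry: CacheEntry): Promise<void>;
  del(key: string): Promise<void>;
}

export class ISRService {
  private readonly cache: Cache;

  constructor(cache: Cache) {
    this.cache = cache;
  }

  // get and set are omitted; they delegate to the wrapped cache.

  del(key: string): Promise<void> {
    return this.cache.del(key);
  }

  // Syntactic sugar: invalidating a page is deleting its cache entry,
  // so the next request for that path is rendered from scratch.
  invalidate(pathname: string): Promise<void> {
    return this.del(pathname);
  }
}
```

A webhook from a CMS, an admin action, or a background job could all call `invalidate` with the path of the page whose data has changed.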

We can now use this function from anywhere in our codebase to purge a page from the cache.

Adding a Button to Invalidate the Cache

For the sake of this example, I'm going to add a new button to our time.astro page that will allow us to invalidate the cache and cause the page to be re-rendered. This button will simply make a POST request to the same page, and the page will call the invalidate function that we created earlier when it handles that request.
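The server-side half of this can be sketched as a small helper. `invalidateOnPost` and the `Invalidator` type are hypothetical names; the assumption is that the page's frontmatter passes in `Astro.request` and `Astro.url`, and that the button lives in a form that submits with the POST method.

```typescript
// Anything that can purge a page by path, e.g. the ISR service.
type Invalidator = { invalidate(pathname: string): Promise<void> };

// Purges the current page from the cache when the request is a POST.
// Returns true if an invalidation was triggered.
export async function invalidateOnPost(
  request: Request,
  url: URL,
  isr: Invalidator,
): Promise<boolean> {
  if (request.method !== "POST") {
    return false;
  }
  await isr.invalidate(url.pathname);
  return true;
}
```

Since the middleware skips the cache for non-GET requests, the POST reaches the page itself, the entry is purged, and the next GET renders and caches a fresh copy.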

We can now test this out by running our application and clicking on the button. If everything is working correctly, we should see the time change immediately after clicking on the button.

Conclusion

On-demand revalidation is a great feature that can help us improve the performance of our website and the user experience. It allows us to revalidate a page's cache whenever there is an update to the underlying data, rather than waiting for a predetermined interval to elapse. This means that we can serve the new page immediately after the data change has been made, regardless of the revalidation time.

I found it very easy to extend this implementation to support various use cases, such as blog posts, product listings, or any content that requires frequent updates.

I hope that you found this post useful and that it helped you understand how to implement on-demand revalidation in Astro.
